A journal of work carried out on pep and nom.

Here I make notes about work I am carrying out on the PEP and NOM system (September 2019 seems to be the date that I finally had a decent implementation in 'c'). This file shows a long litany of programming work carried out, a lot of it in Colombia, probably because time goes slower in that country.

Comments, suggestions and contributions to mjb at nomlang.org

This document serves as a catch-all for 'to do' lists and lists of ideas to implement.

Working on /eg/machine.snippet.tohtml.pss, which appears to be more or less working now. Now I need to add it to the blog.mph functions which generate the HTML for this website. After this I plan to work on /eg/text.tomarkdown.pss so that I can generate a markdown version of the documents here, and hopefully soon generate a book with some document system such as Quarto or Typst.

Below is a rudimentary example of what I would like to produce (it is generated by machine.snippet.tohtml.pss), but it needs better formatting.

Machine State
  Stack: word*space*
  Work: the <era>
  Peep: EOF
  Acc: 324
  Flag: FALSE
  Esc: \
  Delim: *
  Chars: 132450
  Lines: 1862

Tape (size: 500); columns: cell, text, mark
  0 within { and } >>>>
  1 :starting: “first”
  2> "the end delimiter"

Partial Program Listing
  0> while [class:space]
  1: clear
  2: testeof
  3: ++
  4: --
  5: replace [text:x] [text:y]
  6: jump [int:0]

I had some interesting ideas about an image manipulation chat script called /eg/chat.images.pss, which I haven't written yet. My idea is that I should just start out by writing down some of the phrases that I would like the script to respond to, and then work out the grammar and semantics from there.

"convert all to jpg"

"crop big images to a square"

"big means|is 100K"

Then I could write underneath how I think the chat script should parse these phrases according to its Tiny Language Model. For example:

command*set*to-clause*

Another good idea is to use a “todo” state variable, which contains a command that was previously constructed from context. Here is an example dialog:

user: convert all images

cs: to what format would you like to convert them?

user: png (set todo="convert to png")

# then execute the todo state variable on the next iteration of the script

The idea of all this is to allow the user to interact 'expressively' with the objects (in this case image files). So the user expresses his or her intention and the script works it out.

I feel, rather grandiosely, that nom should be promoted at conferences such as SPLASH, GopherCon or POPL, because I don’t think people are going to get enthused while randomly trawling the web.

A Bogota street musician called Bayron Celis played a song called 'Maracas' by some Mexican artist. He also told me about guitars called 'Bucaramanga', which are made in that city.

random link: the arduino car robot with bluetooth. projecthub.arduino.cc/fractaltech/create-your-own-2wd-arduino-robot-with-bluetooth-control-6f6391

Quarto and Typst, or maybe even just markdown + pandoc, could be sufficient for publishing a book about the Pep & Nom system. In which case, the top priority should be cloning the text-to-html script and making markdown the target.

Another list of things to do, in order:

/eg/text.tohtml.pss e.g. -- will start a sublist and continue it. But really this will just be a 'second level' list.

/eg/text.totoc.pss which will be a 'preprocessor' for text files which contain sets of headings. This will convert the headings into tables of contents, using the same techniques as in /eg/toc.pss (i.e. multiple [toc] labels are allowed), and it will use lists (-) and sublists (--) to render these tables of contents.

/eg/machine.tohtml.pss or /eg/machine.snippet.tohtml.pss insert pep machine diagrams in text. The problem is that the system command is failing in some circumstances in the pep interpreter, so I can't do

write; clear; add "pep -f eg/machine.tohtml.pss sav.pp"; system; print;

to insert the diagram in the text. And machine.snippet.tohtml.pss needs to be modified (eof becomes 'when the end of block is reached').

perl translator to render these machine diagrams

latex or epub code. Or write a text-to-markdown script and then just publish from markdown.

Encountered a difficult segmentation fault after recompiling the pep interpreter. The script /eg/text.tohtml.pss is producing a segmentation fault on start-up, before it even begins parsing. Strangely, all other scripts seem to be working normally.

Valgrind tells me that this occurs at /object/pep.c line 2314:

if (inlineScript != NULL) {
  // ...
  fputs(inlineScript, temp);
  // ...
}

which is just saving the inlineScript given to the -i option to a temporary file. But this code should never be executed, because the fault happens even when there is no inline script, so inlineScript should be NULL. I suppose this pointer is getting corrupted somehow.

Was working on a script to insert pep machine diagrams into the /eg/text.tohtml.pss output. This would have been very similar to the task that /eg/nom.snippet.tohtml.pss carries out, but I realised that it is much simpler to use the system command to insert the output of /eg/machine.tohtml.pss into the HTML output at the correct place. This has the big advantage of not requiring an extra snippet script for this job.

The example script /eg/chat.timeline.pss has got to a useful stage, and the concept of using nom in a kind of chatbot environment has proved interesting. Another idea is to integrate calls to some AI engine, such as Gemini.

Also fixed a UTF-8 problem in the perl translator (stdin was not set to binmode utf8). Added [-_.] to identifiers in /eg/xml.parse.pss

I became bogged down on /eg/chat.nom.tr.pss and took a quick break from it. I will now start a new 'green-fields' chat script called /eg/chat.timeline.pss, which will be a chatbot for managing the timeline script.

current projects and ideas

/eg/text.tohtml.pss

/eg/text.tohtml.pss script. This

/eg/xml.parse.pss

script. I realised, in a moment of lucidity, that all I need to do to add these characters is create a few new grammar rules such as “id '.' id -> id” and “id '_' id -> id”. I think that I have an instinctive aversion to parsing character-by-character with nom, because I think it will be slow or difficult, but it is neither.
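To make this concrete, here is a rough Python sketch (not nom, and not the code in /eg/xml.parse.pss; the function names are made up). The lexer only produces plain alphanumeric id tokens plus single punctuation tokens, and the rule id JOINER id -> id, applied repeatedly to the top of a stack, glues names like my.tag_name-2 back together.

```python
JOINERS = {".", "_", "-"}  # characters allowed inside identifiers


def lex(text):
    """Split text into (type, value) pairs: 'id' for runs of letters and
    digits, the character itself for anything else. Spaces are skipped."""
    tokens, i = [], 0
    while i < len(text):
        ch = text[i]
        if ch.isalnum():
            j = i
            while j < len(text) and text[j].isalnum():
                j += 1
            tokens.append(("id", text[i:j]))
            i = j
        else:
            if not ch.isspace():
                tokens.append((ch, ch))
            i += 1
    return tokens


def reduce_ids(tokens):
    """Shift each token onto a stack, then keep applying the rule
    id JOINER id -> id to the top of the stack (like a nom .reparse)."""
    stack = []
    for tok in tokens:
        stack.append(tok)
        while (len(stack) >= 3 and stack[-3][0] == "id"
               and stack[-2][0] in JOINERS and stack[-1][0] == "id"):
            right, joiner, left = stack.pop(), stack.pop(), stack.pop()
            stack.append(("id", left[1] + joiner[1] + right[1]))
    return stack
```

Whitespace is simply skipped here for brevity, so "a . b" would also become one id; a real lexer would keep space tokens so that the rule only fires on adjacent characters.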

# create a data 'list' screen with a search box
search-list {
  name: phrase-list;      # becomes 'phraseListScreen' in generated code
  loader: phraseLoader;   # this implements an interface DataLoader
  source:
  on-item-click: push phrase-detail;
}

widget {
  name: phrase-detail;
  a: button {
    text: "one"; icon: edit;
    on-click: push edit-phrase;
  }
  b: button {
    text: "two"; icon: settings;
    on-click: push app-settings;
  }
  # this is a visual layout of the UI elements that are given above.
  # it seems more intuitive to me than huge nested lists of Row()
  # and Column(), but the idea is experimental. ".." means new row.
  # I don't know how to do columns.
  layout:
    .. a
    .. b
  ;
}

The above sketches some ideas from a language implemented in nom. The parsing and compiling phase is fairly simple; in fact, the point of the language is really just to save typing. It should allow functional, 'vanilla' apps with a minimum of coding.
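Two small pieces of such a generator, sketched in Python with hypothetical function names: turning a DSL name like phrase-list into the generated name phraseListScreen (as the comment in the example says), and reading the experimental layout block, assuming that every '..' starts a new row and that the widget names after it are space separated.

```python
def screen_name(dsl_name, suffix="Screen"):
    """'phrase-list' -> 'phraseListScreen' (lower camel case plus suffix)."""
    parts = [p for p in dsl_name.split("-") if p]
    if not parts:
        raise ValueError("empty name")
    head, *rest = parts
    return head.lower() + "".join(p.capitalize() for p in rest) + suffix


def parse_layout(text):
    """Rows of widget names from a layout block: each '..' starts a new
    row; the closing ';' and the 'layout:' keyword are ignored."""
    rows = []
    for word in text.replace(";", " ").split():
        if word == "layout:":
            continue
        if word == "..":
            rows.append([])
        elif rows:
            rows[-1].append(word)
        else:
            raise ValueError(f"widget {word!r} appears before the first '..'")
    return rows
```

The nested list of rows would then map directly onto a Column of Rows in the generated Flutter code.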

Even the model classes should be automatically created, based on the example JSON text data which is supplied. The JSON text data could contain hints about the data types etc.

More ideas and work: in general the chat-loop or chatbot idea for use with nom has shown much promise. I have been working on an example script called /eg/chat.nom.tr.pss. This is a direct adaptation of the script /eg/nom.to.pss, which works by accepting a 'plain' English request or command (with a very limited vocabulary and grammar) and producing the correct bash commands to translate a nom script into various other languages. The chat-loop version of this provides a script loop written in ruby, which accepts input from <stdin> and feeds that input into the nom script.

State is maintained via a properties text file in the format

file=
language=
that=
# etc
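A minimal Python sketch of handling such a state file (the helper names are hypothetical; the real chat loop is written in ruby). It reads key=value lines, ignoring blanks and # comments, writes them back, and turns every setting that has a value into a parse token, so that a nom script could be fed something like file*that*.

```python
from pathlib import Path


def parse_state(text):
    """Parse 'key=value' lines; blank lines and '#' comments are ignored."""
    state = {}
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, _, value = line.partition("=")
        state[key.strip()] = value.strip()
    return state


def format_state(state):
    return "".join(f"{key}={value}\n" for key, value in state.items())


def load_state(path):
    p = Path(path)
    return parse_state(p.read_text(encoding="utf-8")) if p.exists() else {}


def save_state(path, state):
    Path(path).write_text(format_state(state), encoding="utf-8")


def state_tokens(state):
    """Make a parse token for each variable that currently has a value."""
    return "".join(f"{key}*" for key, value in state.items() if value)
```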

ideas

/eg/language.list.txt: this can contain words in English, categorised by parts of speech (adjectives, articles, nouns etc.), along with one-word translations into various languages.

/eg/ folder.

/eg/text.tohtml.pss by inserting a marker such as [filelist /eg/]. This would use the system command to insert the output of a different nom script, such as /eg/doc.marker.tohtml.pss (not written yet):

"word*word*" {
  clear; get;
  "[filelist" {
    clear;
    add 'pep -f "$PEPNOM/eg/doc.marker.tohtml.pss" -i "filelist ';
    ++; get; --; add '"';
    system; print;
  }
}

This /eg/doc.marker.tohtml.pss script could handle various sorts of markers.

It would probably be better to use a parse-token list like

"[*word*file*]*" { ... }

#: languages
"ruby","java","dart",
"python","rust","zig" {
  # ... more code
}

I have been experimenting with adding a chat loop to the nom script /eg/nom.to.pss. The idea is to create a loop that prompts for, and feeds, queries or commands to the script (which is designed to help translate nom scripts to other languages using a simple English prompt). One problem that I have found is that the messages emitted by nom.to.pss are not very chatty, or are not appropriate for a chatbot environment.

Another issue is that I have a lot of logic (such as testing for the existence of the file to translate) in the bash script which is generated by the nom.to.pss script. This is okay, but I am wondering if it would be better to put that logic in the nom script itself (using the system command). This goes against my idea of keeping nom as a purely parsing/recognising/compiling language, but in this context it seems more logical to put the code into the nom script.

pep -f eg/chat.nom.tr.pss -i //makechat
./test.rb

One of the points of this chat loop is to maintain context, that is, the context of the conversation. It occurs to me that I can do this simply by reading the state file and generating parse tokens from it, based on the data recorded there. Below is a conceptual example:

# read the state file with the system command
clear;
add 'sed -n "/^server=/{s/^server=//;s/ *$//;p;}" chat-state.txt 2> /dev/null';
system;
# the server state variable is set, so make a parse token
!"" { put; clear; add 'server*'; push; .reparse }
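The example relies on system, which (as I understand it from the notes here) runs the workspace as a shell command and replaces the workspace with what the command prints. Here is a rough Python model of that behaviour; both functions are hypothetical and are not the pep implementation.

```python
import subprocess


def system(workspace):
    """Run the workspace text as a shell command and return what it printed
    on stdout, which becomes the new workspace. stderr and the exit status
    are ignored here; a real implementation would need to decide on them."""
    result = subprocess.run(workspace, shell=True, capture_output=True, text=True)
    return result.stdout


def server_token(state_file="chat-state.txt"):
    """The nom fragment above, modelled in Python: read the server setting
    and return a 'server*' parse token only if it has a value."""
    work = system(f'sed -n "/^server=/{{s/^server=//;s/ *$//;p;}}" {state_file} 2> /dev/null')
    # sed prints a newline even when the value is empty, so trim first
    return "server*" if work.strip() else ""
```

One detail this brings out: a line like "server=" still makes sed print an empty line, so in the nom version the workspace may need trimming before the !"" test.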

Another important idea is the “maybe*” parse token, which indicates that the user should approve a particular action. For example:

"maybe*action*file*language*" {
  clear; ++;
  add "Shall we "; get; ++; add " the file "; get;
  add " to the "; ++; get; add " language?";
  --; --; --;
  print;
  # now get a response with the system command, and exit if
  # 'no', but if 'yes', remove the 'maybe*' token and re-parse
  # brilliant!
}
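The approval step can be sketched in Python (hypothetical names; token types and their values are kept in two parallel lists, standing in for the pep stack and tape). The question is built from the tokens; on 'yes' the maybe* token is dropped so that action*file*language* can be parsed again, and anything else abandons the action.

```python
def confirm_question(tokens, values):
    """Question for a stack that starts maybe*action*file*language*."""
    if tokens[:4] != ["maybe", "action", "file", "language"]:
        return None
    action, filename, language = values[1:4]
    return f"Shall we {action} the file {filename} to the {language} language?"


def apply_answer(tokens, values, reply):
    """'yes' removes the maybe* token (ready to re-parse); anything else
    abandons the proposed action."""
    if reply.strip().lower() in ("y", "yes"):
        return tokens[1:], values[1:]
    return [], []
```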

I put all the essential pep files on GitHub at github.com/pepnom/pep, so now the pep system can be downloaded with:

git clone https://github.com/pepnom/pep

Also, I have been thinking about having a grammar-centric view of software, which I think has potential. Please see the blog for a development of this idea.

I have been working on a language phrase tutor in Dart/Flutter and haven't done any more work on pep or nom. I did have the idea to create a nom script to generate Flutter apps. This would be a parsing experiment where the input would be a JSON-like format (maybe with fewer quotation marks). The output would be a set of Dart screens, each reflecting an object in the input format:

screen.user-details {
  text: "Please enter your details below",
  textbox: ....
}

But this is pretty similar to the Flutter format anyway.

I haven't been working on the Nom system but have been working on the “four apps” in Dart and Flutter that I mentioned elsewhere. I will probably use Nom parsers and renderers in those apps after translating them to dart.

My work on nom is currently stopped at the stage of creating the AI code translation script, which was only just begun. It would be handy to create new nom translation scripts like those at www.nomlang.org/tr/

Also, I would like to add the machine diagrams to the website. The /eg/machine.tohtml.pss script is, I think, reasonably good, but it has to be integrated into /eg/text.tohtml.pss (using the same techniques as are used for code listings).

Also I would like to create a LaTeX equivalent of /eg/text.tohtml.pss so that I can actually create a printable book of some of the nom documentation. I feel that if I am able to do that, I will have really “achieved” something with Pep & Nom. Also I could send the books to people.

I have been having a mini-break from the www.nomlang.org system. But the major task that I would like to finish is the AI translation helper script, which I have started but can't remember what I called it. The idea was just to allow easy AI (Gemini etc.) translations of the translation scripts in /tr/ so that many other translation languages can be added to nomlang.

Other important tasks for the advancement of nom and pep.

/eg/machine.tohtml.pss

latex translator

Idea: a nom self-test script, for example:

clear; add "abc"; !"abc" { ...error }
unescape "a"; !"abc" { error }
# and so on.

This is called /eg/self.test.nom.pss at the moment and is in a very incipient phase. It has the weakness that the script needs to assume that some syntax or commands do initially work (such as print, tests and blocks etc.).

Idea: a translation template that can be fed into an AI engine to generate new nom translators. This would be based on the lua/perl or dart code, or whichever is currently the best translation script. And it would allow the automatic generation of the new translation script.

This translation template consists of 2 parts. The first is the code that needs to be translated, including the pep machine class and methods and the main() method with command line switches. This code also includes the snippets that implement the commands, such as add. Above each snippet will be a special comment line that will allow a nom script or bash script to extract that snippet from the (AI) translated code and insert it into the template. This first file is the code that will be translated by an AI engine, and the code will be based on a good existing translation script (e.g. perl or dart).

The second part is the template itself which contains place-holders for the snippets and the machine class and methods. Then we require some script or code to combine the two parts to create the translated translator. The second part could just be a nom script but it would have to rely on the order of the snippets.

Or I could rely on the system command to insert the found translated snippets into the template file:

# read line by line or word by word
# the snippet file will have the format
# (comment syntax in target language) add-snippet:
# (code to add to workspace in target language) eg: this.work += '; get;
# so the previous line indicates what is coming next
# Tokens: line* lines* nl* snipname*
parse>
pop; pop;
"line*line*","lines*line*" {
  # maybe add the nom add command and quotes here ?
  clear; get; ++; get; --; put;
  clear; add "lines*"; push; .reparse
}
# the rules below need 3 tokens
pop;
"nl*text*word*","nl*word*word*" {
  clear; ++; ++; get; --; --;
  # the marker word looks like 'add-snippet:'
  E"-snippet:" {
    replace "-snippet:" ""; put;
    clear; add "snipname*"; push; .reparse
  }
}
# maybe need a lookahead token? so that we know when to call
# the system command to insert into the template file -
# or build the 'sed' command now ?
B"snipname*line".!"snipname*line*".!"snipname*lines*" {
  replace "snipname*lines*" "";
  replace "snipname*line*" ""; push;
  --;
  add "sed -i 's/'"; get; add "-snippet:/
  ++;
  # build a sed inline replace here? or build the new translator
  # I think build the new translator here in this script is better.
  # but the snippets need to appear in the correct order...
  # transfer the unknown token attrib here ...
}
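Since the worry is that the snippets must appear in the correct order, here is a rough Python sketch of the combining step that sidesteps the problem by looking snippets up by name. It assumes the '<comment> add-snippet:' marker format described above and a hypothetical {{add}}-style placeholder in the template; neither the placeholder syntax nor the function names come from the existing scripts.

```python
import re

# Marker line: a comment token followed by '<command>-snippet:', for example
# '# add-snippet:' (ruby, python) or '// get-snippet:' (dart, java).
MARKER = re.compile(r"^\s*\S+\s+([A-Za-z]+)-snippet:\s*$")
# Hypothetical placeholder syntax in the template file: {{add}}, {{get}} ...
PLACEHOLDER = re.compile(r"\{\{([A-Za-z]+)\}\}")


def extract_snippets(translated):
    """Map each command name to the code lines that follow its marker."""
    snippets, current = {}, None
    for line in translated.splitlines():
        m = MARKER.match(line)
        if m:
            current = m.group(1)
            snippets[current] = []
        elif current is not None:
            snippets[current].append(line)
    return {name: "\n".join(lines).strip("\n") for name, lines in snippets.items()}


def fill_template(template, snippets):
    """Insert snippets by name, so their order in the file does not matter."""
    def insert(m):
        name = m.group(1)
        if name not in snippets:
            raise KeyError(f"no translated snippet for '{name}'")
        return snippets[name]
    return PLACEHOLDER.sub(insert, template)
```

Multi-line snippets would also need re-indenting to match the placeholder's position, which this sketch does not attempt.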

Made an inline interpret switch for the nom.toperl.pss translator.

Made the interpret() method of /eg/nom.toperl.pss work, with minimal testing. It is hard to believe that it works, but it does.

The important tasks for pep and nom seem to me to be (todo!):

/eg/nom.tolua.pss as well as the self-help system.

perl translation of /eg/palindrome.pss

/eg/nom.toperl.pss and also the other scripting languages, because it is handy for running in interpreter mode. (done for nom.toperl.pss)

/compile.pss so that it uses the new grammar and script organisation from the more recent translation scripts.

latex translator

/eg/toybnf.pss script so that it can lex as well as parse

tr/nom.toforth.pss translator to translate nom scripts into the forth language (with DIY memory management).

Looking at the interpret() method in the /eg/nom.toperl.pss nom → perl translation script. Fixed a small bug in the input switches.

It would probably be good to add the help system to the translation scripts, which could also be used to create 2nd generation scripts with a help word like /gen2. This would create the parser parser, and maybe run the input script. This system could use the /eg/nom.to.pss script to generate the translator with itself.

/tr/nom.toperl.pss This is pretty remarkable, if I say so myself: it’s a translated translator that is interpreting itself.

quit command to return the number in the accumulator as the exit code. This allows scripts to act as pure 'recognisers' for patterns and formal languages, meaning that they return zero only if the input is a valid sentence in the given language, and a non-zero value if not.
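The same recogniser idea in Python, using a hypothetical checker for balanced parentheses as a stand-in for any formal language: recognise() returns 0 for a valid sentence and 1 otherwise, and main() hands that value to the shell as the exit status, just as quit does with the accumulator.

```python
import sys


def recognise(text):
    """0 if text is a balanced sequence of parentheses (whitespace
    allowed), otherwise 1."""
    depth = 0
    for ch in text:
        if ch == "(":
            depth += 1
        elif ch == ")":
            depth -= 1
            if depth < 0:
                return 1          # a ')' with no matching '('
        elif not ch.isspace():
            return 1              # a character outside the language
    return 0 if depth == 0 else 1


def main():
    sys.exit(recognise(sys.stdin.read()))
```

Saved as a script that ends by calling main(), it can be used directly in shell tests, for example echo '(())' | python3 parens.py && echo valid (the file name is just an example).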

.restart and .reparse bug in begin blocks for the perl translator.

system command ...

Working on segmentation faults and memory leaks in the pep interpreter. The 'until' code in the interpreter is bad, but that may not be the only problem. I may have fixed the until and untiltape code, but valgrind still complains about an uninitialised value at line 560 in /object/machine.interp.c, in the strcmp() function. I think this is because, for some reason, the instruction parameter ii->a.text is not valid.

Added the accumulator as an exit code for the quit command to the pep interpreter. Desultory testing.

Working on /eg/machine.tohtml.pss, which is supposed to create a nice visual (HTML) representation of the pep machine, tape and (compiled) program listing.

I am not sure of the point of this massive ongoing coding effort, but the system still seems potentially revolutionary.

Did lots of interesting work on /eg/timeline.tohtml.pss: added self-testing and translation, and reformed the grammar.

The token reduction technique in /eg/timeline.tohtml.pss is really quite amazingly good. It gets rid of, or 'evaporates', component parse tokens when they are out of context, meaning that they don’t form part of the larger pattern. This technique is much more flexible and succinct than previous methods I have used, and will become a standard parsing technique.

It also means that there is no reason not to add lots of new parse tokens (e.g. for punctuation), because it is easy to get rid of them when they are no longer wanted.
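Here is a rough reconstruction of the evaporation idea as a Python shift-reduce loop. It is not the code in /eg/timeline.tohtml.pss; the token names and rules are invented for the example. date and punct are 'component' tokens: if one is still second from the top of the stack after a reduction attempt, then in this small grammar it can no longer be part of a larger pattern, so it evaporates and its text is folded into the token beneath it.

```python
# (first, second) -> reduced token type
RULES = {
    ("date", "text"): "entry",
    ("text", "text"): "text",
    ("entry", "entry"): "timeline",
    ("timeline", "entry"): "timeline",
}
COMPONENTS = {"date", "punct"}  # only meaningful as part of a larger pattern


def reduce_top(stack):
    """Apply RULES to the top two tokens until none match."""
    while len(stack) >= 2:
        (a, av), (b, bv) = stack[-2], stack[-1]
        new = RULES.get((a, b))
        if new is None:
            return
        stack[-2:] = [(new, av + " " + bv)]


def evaporate(stack, i):
    """Remove the out-of-context component at index i, appending its text
    to the token below so that nothing from the input is lost."""
    _, value = stack[i]
    if i == 0:
        stack[0] = ("text", value)   # nothing below: demote to plain text
    else:
        del stack[i]
        kind, below = stack[i - 1]
        stack[i - 1] = (kind, below + value)


def parse(tokens):
    stack = []
    for tok in tokens:
        stack.append(tok)
        reduce_top(stack)
        if len(stack) >= 2 and stack[-2][0] in COMPONENTS:
            evaporate(stack, len(stack) - 2)
            reduce_top(stack)
    if stack and stack[-1][0] in COMPONENTS:   # trailing component at EOF
        evaporate(stack, len(stack) - 1)
        reduce_top(stack)
    return stack
```

Because stray components disappear instead of blocking later reductions, adding a new token type (say for another punctuation mark) only needs an entry in COMPONENTS.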

Started to add the self-help, self-translate and self-test to the script /eg/grammar.en.pss, which is an adaptation of /eg/natural.language.pss

Also improved the self-testing in /eg/text.tohtml.pss, but I am starting to get lots of segmentation faults when I add to this script (which is now pretty big). These are thrown by the pep interpreter (which is written in plain 'c', so that is probably not surprising). I need to hunt down what is happening.

I tried to make file links appear in <code> style. So /eg/index.txt should use a code font, and so should /tr/index.txt

Also adding all the selfs to /eg/maths.parse.pss, and it all seems to work nicely, including creating and opening a PDF from a random test input with the help word /test.line.pdf

Added self-translation, self-testing and self-help to the old script /eg/json.check.pss. It seems to be working well and is now probably the model to copy for adding this to other scripts. With a simple phrase like

pep -f json.check.pss -i /toall

we can translate this script to all the available nom translation languages (e.g. [nom:tr.links]). But that is not all! We can also reformat to LaTeX/PDF/HTML with pep -f json.check.pss -i /topdf etc. This is possible because of the script /eg/nom.to.pss and because of the different translation scripts and formatting scripts in the /tr/ and /eg/ folders.

Working on the 'all' method for the script /eg/nom.to.pss, which translates a nom script to all languages for which there is a translator. It appears to be working.

Some ideas for the system:

until with multiple end delimiters

Wrote the script /eg/tocfoot.tohtml.pss, which demonstrates adding a table of contents and a footnote list to a document using markers such as [toc] and [foot]. The script is a variant of /eg/toc.tohtml.pss but is much better, because the index lists can be placed anywhere in the document with the markers, and they can also be repeated. The script uses the same technique of building the lists in marked tape cells at the top of the tape.

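read;
"#" {
  while [#];
  B"##" {
    # save the hashes in this tape cell before looking at the stack
    put; clear; pop;
    "nl*" {
      # '##' starts the line, so this is a heading
      push; clear; until "\n"; put;
      clear; add "heading*"; push; .reparse
    }
    # '##' doesn't start the line, so it's just a normal word.
    push; clear; get; whilenot [:space:]; put;
    clear; add "word*"; push; .reparse
  }
}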

The pep interpreter still throws the occasional segmentation fault when adding stuff to the /eg/text.tohtml.pss file. I wonder if that has to do with program size limits, because that script is pretty big now.

I wrote the script /eg/nom.to.pss based on the code in /eg/xml.parse.pss. I could also make this a testing script as well, using the existing code. This script responds to very simple sentences like 'translate eg.pss to ruby' or 'to ruby eg.pss'.

The advantage of having this script is that I can now include it in the help system of all other scripts in order to provide 'self-translation'.

Thinking about the etymologies idea for the text-to-html formatter; I would like to style the pop-up to display the etymologies in 2 columns.

Ideas and things to do.

until

until ")","\n";

while command can do that, but then you have to read the extra character and consume it. This introduces problems with reading on EOF (which will exit silently and cause considerable head-scratching when you are trying to debug).
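An until with several end delimiters could behave like this Python sketch. The function is hypothetical and mimics my understanding of until: keep reading until the text read so far ends with a delimiter that is not escaped. Reaching the end of input is reported to the caller instead of exiting silently.

```python
def until_any(text, pos, delimiters, escape="\\"):
    """Read from text[pos:] until what has been read ends with one of the
    delimiters (not preceded by an odd number of escape characters).
    Returns (read, new_pos, delimiter); delimiter is None when the end of
    input was reached before any delimiter."""
    i = pos
    while i < len(text):
        i += 1
        read = text[pos:i]
        for d in delimiters:          # earlier delimiters take priority
            if read.endswith(d):
                before = read[: -len(d)]
                escapes = len(before) - len(before.rstrip(escape))
                if escapes % 2 == 0:
                    return read, i, d
    return text[pos:], len(text), None
```

Returning the delimiter that matched lets the script decide what to do next (whether a ')' or a newline closed the text) without the extra while and read.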

system command. This needs to be put into the pep interpreter and all the translators.

perl translator /tr/nom.toperl.pss so that it can become an interpreter, and then use that code as a model for ruby, python etc.

Added ligatures for æ words like æroplane, gynæcology, archæology and Julius Cæsar.

Experimenting with substituting ligatures for character sequences in /eg/text.tohtml.pss, not for any practical purpose I can think of.

"yolngu" "yolŋu": this is a phonetic spelling for the Australian language which is used in some texts, and also for Bandjalang.

yuŋga - to go, to walk
jaŋga - to sing
muŋga - to sleep
ŋuŋgi - to sit
ŋali - we (dual, including the person being spoken to)
ŋa:wih - a long way
ŋari - a person, a man
ŋami - a mother

"Academy" "Ακαδημία"

"Acoustics" "Ακουστική"

"Acrobat" "Ακροβάτης"

"Alphabet" "Αλφάβητο"

"Angel" "Άγγελος"

"Atmosphere" "Ατμόσφαιρα"

"Catastrophe" "Καταστροφή"

"Chaos" "Χάος"

"Climate" "Κλίμα"

"Cosmos" "Κόσμος"

"Democracy" "Δημοκρατία"

"Ecstasy" "Έκσταση"

"Economy" "Οικονομία"

"Genesis" "Γένεση"

"Horizon" "Ορίζοντας"

"Myth" "Μύθος"

"Planet" "Πλανήτης"

"Rhythm" "Ρυθμός"

"Sphere" "Σφαίρα"

"Telephone" "Τηλέφωνο"

"cooperate" "coöperate"

"naivete" "naïveté"

"reelect" "reëlect"

"aerie" "aërie"

"Noel" "Noël"

"Chloe" "Chloë"

"Zoe" "Zoë"

"Moeller" "Moëller"

"Curacao" "Curaçao"

Umlaut (in borrowed German words):

"uber" "über"

"doppelganger" "doppelgänger"

"fuhrer" "Führer"

"gemutlichkeit" "Gemütlichkeit"

"schadenfreude" "Schadenfreude"

fi (f + i)

ff (f + f):

unusual spellings

"fairy" "faerie"

"fairy" "færie"

"eon" "æon"

"aetiology" "ætiology"

"encyclopaedia" "encyclopædia"

"archaeology" "archæology"

"mediaeval" "mediæval"

"primaeval" "primæval"

"caesura" "cæsura"

"Caesarean" "Cæsarean"

"anaemia" "anæmia"

# just the base of the word

"anaemi" "anæmi"

"faec" "fæc"

"diaeresis" "diæresis"

"diaresis" "diæresis"

"dieresis" "diæresis"

"leucaemia" "leucæmia"

"paediatrics" "pædiatrics"

"orthopaedic" "orthopædic"

œ Ligature Words:

"foetus" "fœtus"

"foetid" "fœtid"

"fetid" "fœtid"

"gynaecology" "gynæcology"

"homoeopathy" "homœopathy"

"oesophagus" "œsophagus"

# just the root of the word

"oesophag" "œsophag"

"Oedipus" "Œdipus"

"diarrhoea" "diarrhœa"

"oeconomics" "œconomics"

"manoeuvre" "manœuvre"

"phoenix" "phœnix"

"amoeba" "amœba"

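A small Python sketch of the substitution, using a few pairs from the lists above (a real version in /eg/text.tohtml.pss would need the whole list). Matching only at the start of a word lets short bases such as anaemi and oesophag cover their derived forms; keeping an initial capital is my own assumption about what is wanted.

```python
import re

# A few (plain, ligature) pairs taken from the lists above.
LIGATURES = [
    ("encyclopaedia", "encyclopædia"),
    ("archaeology", "archæology"),
    ("anaemi", "anæmi"),       # base: anaemia, anaemic
    ("oesophag", "œsophag"),   # root: oesophagus, oesophageal
    ("manoeuvre", "manœuvre"),
    ("cooperate", "coöperate"),
]


def ligate(text):
    """Replace plain spellings that start a word with the ligature form,
    keeping an initial capital letter."""
    for plain, fancy in LIGATURES:
        pattern = re.compile(r"\b" + re.escape(plain), re.IGNORECASE)

        def swap(m, fancy=fancy):
            if m.group(0)[0].isupper():
                return fancy[0].upper() + fancy[1:]
            return fancy

        text = pattern.sub(swap, text)
    return text
```

The word-boundary anchor also means that "uncooperative" is left alone, which may or may not be wanted.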
ideas:

system command (1 aug 2025: not implemented).

/eg/text.tohtml.pss so that the pattern <P> at the start of a line will be converted to a drop capital (within the paragraph text).

/eg/machine.tohtml.pss which converts a plain text representation of the pep/nom machine (with a partial program listing) into HTML, PostScript, LaTeX, EPUB etc.

Partial Program Listing (size:1390 ip:106 cap:20000)
103: testclass [class:alnum]
104: jumpfalse [int:7]
105: while [class:alnum]
106> put
107: clear
108: add [text:name*]
--------- Machine State -----------
(Buff:16/39 +r:0) Stack[starttag*</*] Work[book] Peep[>]
Acc:0 Flag:TRUE Esc:\ Delim:* Chars:12 Lines:1
--------- Tape --------------------
Tape Size: 500
4/10 ) 0 [book]
4/10 ) 1 [book]
0/10 ) 2> []
0/10 ) 3 []

/eg/toc.tohtml.pss which creates an HTML table of contents from a plain text document. The script would be called /eg/tocfoot.tohtml.pss and would allow the inclusion of one or more [TOC] and [FOOT] markers in the source text. These markers would be converted to a table of contents and a list of footnotes respectively.

# name and reserve 2 cells at the top of the tape for
# the table of contents and footnote list.
begin { mark "toc"; ++; mark "foot"; ++; }

whilenot [:space:];
!"" {
  put; lower;
  "[toc]" { clear; add "toc*"; push; .reparse }
  "[foot]" { clear; add "foot*"; push; .reparse }
  clear; add "word*"; push; .reparse
}
# .... here actually build the toc with headings and the
# .... foot with footnotes.
while [:space:]; clear;

parse>
pop; pop;
"word*word*","text*word*","text*text*" {
  clear; get; add " "; ++; get; --; put;
  clear; add "text*"; push; .reparse
}
# this code should allow multiple tables of contents in one
# document.
(eof) {
  "text*toc*" {
    clear; get; mark "here"; go "toc"; get; go "here"; put;
    clear; add "text*"; push; .reparse
  }
  "toc*text*" {
    # the toc comes first, then the text after it
    clear; mark "here"; go "toc"; get; go "here"; ++; get; --; put;
    clear; add "text*"; push; .reparse
  }
  "text*foot*" {
    clear; get; mark "here"; go "foot"; get; go "here"; put;
    clear; add "text*"; push; .reparse
  }
  "foot*text*" {
    # the footnotes come first, then the text after them
    clear; mark "here"; go "foot"; get; go "here"; ++; get; --; put;
    clear; add "text*"; push; .reparse
  }
  "foot*toc*" {
    clear; mark "here"; go "foot"; get; go "toc"; get; go "here"; put;
    clear; add "text*"; push; .reparse
  }
  "toc*foot*" {
    clear; mark "here"; go "toc"; get; go "foot"; get; go "here"; put;
    clear; add "text*"; push; .reparse
  }
}
push; push;
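For comparison, here is the same two-list idea in Python. It is a sketch: the '## ' heading syntax is borrowed from the heading example earlier in this file, and the {{...}} footnote syntax is purely hypothetical. Both lists are built in one pass over the document, and then every [toc] and [foot] marker is replaced, so each marker may appear any number of times.

```python
import html
import re

HEADING = re.compile(r"^##[ \t]+(.+)$", re.MULTILINE)   # '## Title' lines
NOTE = re.compile(r"\{\{(.+?)\}\}")                     # hypothetical {{note}}


def slug(text):
    return re.sub(r"[^a-z0-9]+", "-", text.lower()).strip("-")


def tocfoot(doc):
    headings = HEADING.findall(doc)
    notes = NOTE.findall(doc)
    toc = "<ul>" + "".join(
        f'<li><a href="#{slug(h)}">{html.escape(h)}</a></li>' for h in headings
    ) + "</ul>"
    foot = "<ol>" + "".join(f"<li>{html.escape(n)}</li>" for n in notes) + "</ol>"

    numbers = iter(range(1, len(notes) + 1))
    body = NOTE.sub(lambda m: f"<sup>{next(numbers)}</sup>", doc)
    body = HEADING.sub(
        lambda m: f'<h2 id="{slug(m.group(1))}">{html.escape(m.group(1))}</h2>', body
    )
    # markers are matched case-insensitively, like the 'lower' in the nom code
    body = re.sub(r"\[toc\]", lambda m: toc, body, flags=re.IGNORECASE)
    return re.sub(r"\[foot\]", lambda m: foot, body, flags=re.IGNORECASE)
```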

Working on /eg/timeline.tohtml.pss, which seems to work, without any huge amount of testing. It can also test itself with:

pep -f timeline.tohtml.pss -i /test | bash

But this script doesn't have any self-translation facilities (unlike the xml.parse.pss script). I wrote a separate script that translates scripts to different languages (based on the code in /eg/xml.parse.pss) and then calls that from within the script help words. This is called /eg/script.translation.pss

script ideas

/eg/timeline.tohtml.pss

/eg/toc.pss and seems to work.

/eg/toc.tohtml.pss which converts a list of headings into an HTML table of contents with links. This is somewhat useful, and it contradicts what I have assumed for a few years: that if I use the first few cells of the tape as a kind of buffer array for saving values, then the normal script operation will interfere with, or overwrite, those cells.

But this, I think, isn't true because, basically, the pop and push commands are designed to do nothing on an empty stack and an empty workspace buffer respectively. This is important in the context of writing a type-checking parser, where the parser is trying to verify that a variable is being assigned a value of the correct type, for example

x = "hello";

Now if 'x' has been defined as an integer, our language may wish to complain bitterly about trying to sausage-in a piece of text (string) into our 32/64 (whatever) bit piece of memory.

The key to this, when using Pep & Nom as a parser, is maybe to save the type of each variable in the top tape cells. However, there are some significant additional problems: scope (which may be doable with some kind of dot notation), and also the fact that we have no idea how many variables need to be checked (unless we do 2 passes) and therefore don't know how many tape cells to allocate for this purpose.
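A Python sketch of the two-pass approach for a hypothetical mini-language with int and string declarations (the syntax is invented for the example, and scope is ignored). The first pass plays the role of the reserved tape cells, recording each declared variable's type; the second pass checks every assignment against it.

```python
import re

DECL = re.compile(r"^\s*(int|string)\s+(\w+)\s*;")        # int x;
ASSIGN = re.compile(r'^\s*(\w+)\s*=\s*(".*"|-?\d+)\s*;')   # x = "hello";


def literal_type(lit):
    return "string" if lit.startswith('"') else "int"


def check(program):
    """Return a list of type errors, one string per problem found."""
    types, errors = {}, []
    lines = program.splitlines()
    for line in lines:                       # pass 1: declarations
        m = DECL.match(line)
        if m:
            types[m.group(2)] = m.group(1)
    for n, line in enumerate(lines, 1):      # pass 2: assignments
        m = ASSIGN.match(line)
        if not m:
            continue
        name, lit = m.groups()
        if name not in types:
            errors.append(f"line {n}: '{name}' is not declared")
        elif types[name] != literal_type(lit):
            errors.append(f"line {n}: cannot assign {literal_type(lit)} to {types[name]} '{name}'")
    return errors
```

Because declarations are collected first, this version also accepts an assignment that comes before its declaration; whether that should be allowed is a language design decision.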

The /to<lang> word seems to work for rust/dart/go/java and compiles, and the translated script can be tested with /testone<lang>. Also with lua/ruby.

I have been working on the /testone <lang> help word in the /eg/xml.parse.pss (limited) XML recogniser. This word, combined with the /to <lang> help word (which translates the script to another language), tests the script, or a translation of the script, with some sample XML input. The system seems very useful and transferable to other scripts. It is probably even worthwhile extracting it to a separate nom script, which I will call something like /eg/discuss.nom.pss

The discuss.nom.pss script is supposed to be a “Tiny Language Model” (a TLM) which can receive phrases like

xml.parse.pss into ruby'

/eg/nom.to.pss can do this, but not with the 'it' words, at least not yet)

So the TLM, powered by Pep & Nom, will accept simple English sentences and parse them into actions or queries. Words like 'it', 'that' and 'this' require some state to be saved, and this will be done by nom using the system command (or maybe read!), which will execute a system command and read the result of the command into the workspace.
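A toy Python version of the 'it' handling, with hypothetical names and a much smaller vocabulary than /eg/nom.to.pss. The state dictionary stands in for the saved state that nom would read back with the system command.

```python
# Assumed target languages; 'go' is left out because it is also an
# ordinary English word ("go ahead") and would need more careful parsing.
LANGUAGES = {"ruby", "python", "java", "dart", "rust", "lua", "perl"}
REFERENCES = {"it", "that", "this"}


def parse_request(sentence, state):
    """Return (file, language) for requests like 'translate eg.pss to ruby',
    'to ruby eg.pss' or 'translate it to java', or None if something is
    missing. state remembers the last file so that 'it' can refer to it."""
    words = [w.rstrip(".,?!") for w in sentence.split()]
    filename = next((w for w in words if w.endswith(".pss")), None)
    if filename is None and any(w.lower() in REFERENCES for w in words):
        filename = state.get("file")
    language = next((w.lower() for w in words if w.lower() in LANGUAGES), None)
    if filename is None or language is None:
        return None
    state["file"], state["language"] = filename, language
    return filename, language
```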

Fixed a couple of bugs in /eg/xml.parse.pss

I seem to have fixed the “.reparse within a begin block” bug, but only for the lua and rust translators. Now I have to fix it for the other translators.

My help system in the scripts often uses a .reparse within a begin block to trigger the help system when an empty document or an invalid first character is found.

Discovered that the .reparse command does not work within begin blocks for some translators. This is because .reparse uses either a “goto” or a break; or continue; command. The break; command won't work because the begin {} block is completely separate from the lex and parse blocks.

Found bugs in /tr/nom.tolua.pss and /tr/nom.torust.pss when translating /eg/xml.parse.pss and /eg/text.tohtml.pss. These were caused by the .restart and .reparse commands in a begin block.

Scripts could also test themselves.

Done:

/eg/nom.to.pss a nom script that accepts instructions like “translate file.pss to ruby” and will carry out the instruction, or else just print out the necessary commands.

/eg/timeline.tohtml.pss the same but for an historical timeline. (done)

system command, which is a new command that reads the output of a shell process into the workspace. (not implemented)

help* token) it is possible to put a /to<lang> help word that will translate the current script into another language. (DONE)

faq.pss translate a simple FAQ text format into html

text.toman.pss create a man page for a document

/eg/text.tohtml.pss

This seems very promising and could be added to any significant script.

I seem to have abandoned the ideas and todo files of this documentation and will probably just put everything here.

Added the beginnings of a help system to /eg/text.tohtml.pss

Had the idea of a TLM, a “Tiny Language Model”, which is just a nom script which parses simple commands and then possibly executes them. Also, a “random” command, which selects a random section of text between 2 delimiters.

I like the idea of implementing help/error tokens in even simple

scripts. These help systems can also be triggered by keywords at the

start of the 'document', possibly wrapped in comment syntax for the

output language. I will try to add such a help system to

/eg/text.tohtml.pss

Added some ideas to the /eg/natural.language.pss script and also added an example phrase grammar to the comment section.

I had the idea that a simple natural language parser could be used to generate frequently used bash commands such as “reducing the size of an image”, “making an image black and white”, and so forth. But this would probably have to be combined with some repl loop written in dart/rust/ruby or anything else to actually execute the commands. So the nom script would parse the input and generate the bash command or commands, and then the script written in ruby would actually execute the commands. The ruby script could also handle reference words like “that” or “this”, which would refer to filenames that have previously been mentioned. This requires remembering state in a way that would be difficult, if not impossible, in pep/nom.

choose an image to edit

choose image

show its dimensions

show dimensions

shrink it by half. Shrink by 50%

make this black and white.
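The reference words in this dialog ('it', 'this', 'that') need remembered state. Here is a small Python sketch of the kind of bookkeeping the ruby (or other) host script might do: remember the last filename mentioned and substitute it for a later 'it'. The class name, the pronoun list and the rule that a "filename" is any word ending in .png, .jpg, .jpeg or .gif are all assumptions for illustration, not part of any existing nom script.

```python
import re


class ReferenceResolver:
    """Hypothetical helper: replace 'it', 'this', 'that' with the last filename seen."""

    PRONOUNS = {"it", "this", "that"}
    # assumption: a filename is any word with an image extension
    FILE_RE = re.compile(r"^[\w./-]+\.(?:png|jpe?g|gif)$", re.IGNORECASE)

    def __init__(self):
        self.last_file = None

    def resolve(self, phrase):
        words = []
        for word in phrase.split():
            bare = word.rstrip(".,!?")  # trailing punctuation is dropped
            if self.FILE_RE.match(bare):
                self.last_file = bare  # remember it for later phrases
                words.append(bare)
            elif bare.lower() in self.PRONOUNS and self.last_file is not None:
                words.append(self.last_file)
            else:
                words.append(bare)
        return " ".join(words)
```

After "choose cat.png", a later "shrink it by half" resolves to "shrink cat.png by half", which a nom script could then parse into a bash command without needing any memory of its own.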

This idea came from a simpler one: given a command like “translate to ruby”, a nom script will generate the bash commands necessary to translate a nom script into the ruby language. From there it seems an easy step to actually execute the commands...

Revised the /eg/flyer.typewriter.tohtml.pss script to include an error* and help* token. Thought about presenting the idea of Pep & Nom at some language conference. O'Reilly's “Emerging Languages” conference would have been ideal (even though nom is not emerging anywhere), but that conference is no longer held.

I wrote a simple letter-to-html formatting script called /eg/letter.typewriter.tohtml.pss which may be interesting because it includes its own help system with documentation. This seems a nice way for the script to be “self-documenting”.

I haven't been doing any work on the nom system except to write a

somewhat dodgy script at /eg/script.tag.pss which is supposed to remove

html <script> tags from an html document. It could be described (charitably)

as “naive”.

Also, it alerted me to the problem of trying to use the until command in a case-insensitive manner: it is not possible, which makes the until command not very useful for case-insensitive language patterns.
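To make the limitation concrete, here is a Python sketch of what a hypothetical case-insensitive variant of until would need to do: compare the end of the accumulated workspace with the delimiter after case-folding both. The function name and signature are invented, and the sketch ignores any escape-character handling.

```python
def until_ci(text, pos, delimiter):
    """Hypothetical case-insensitive 'until': read characters from text,
    starting at pos, until the workspace ends with delimiter (ignoring case).
    Returns (workspace, new_pos)."""
    workspace = ""
    target = delimiter.casefold()
    while pos < len(text):
        workspace += text[pos]
        pos += 1
        if workspace.casefold().endswith(target):
            break
    return workspace, pos
```

With this, a script like /eg/script.tag.pss could stop at </SCRIPT>, </Script> or </script> with one test.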

I have been looking again at Dart/Flutter with the idea of creating a kind of “sociedad” in the Spanish sense of the word, or a “company” of Colombian programmers for the creation of apps. 2 app ideas that seem useful to me are: a language learning app with an emphasis on listening to phrases and only viewing the transcription and translation occasionally. So the app would be “audio-biased” and would implement several of my ideas for language learning. The other app idea is “LocalPaper”, which is a kind of simple-ish blog and writing app which would allow the creation of a local paper or news-sheet. This would include the usual sort of blog or writing functions but also a way to produce a printable (pdf/ps) news-sheet that could be put in local cafes. The news-sheet could contain local events, local education courses, local classifieds and some news and articles. The local paper could also be published to the web if desired, so the rendering engine would support html and pdf/ps/LaTeX.

I like these ideas. The local paper app could include a compiled

nom script which would render to html and pdf/ps (via latex

possibly).

NOTE: I abandoned the 'flag' register idea, because I can just use a marked tape cell as the 'flag' and then access it with

mark 'here'; go 'flag'; get; go 'here';

etc etc. This works fine in /eg/toc.tohtml.pss and in fact I don't know why I thought it wasn't going to work. The top tape cell is protected from being overwritten after pop by the design of the pop command.
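The mark/go idiom above can be modelled in a few lines of Python. This is only a model: it assumes marks are stored in a list parallel to the tape cells (as the translators' 'marks' arrays suggest) and that one name marks only one cell at a time; the class and method names are hypothetical.

```python
class Tape:
    """Minimal model of the pep tape: cells, a parallel list of marks, a pointer."""

    def __init__(self, size=10):
        self.cells = [""] * size
        self.marks = [""] * size
        self.pointer = 0

    def mark(self, name):
        # assumption: a name labels one cell at a time, so clear old uses first
        for i, m in enumerate(self.marks):
            if m == name:
                self.marks[i] = ""
        self.marks[self.pointer] = name

    def go(self, name):
        # move the pointer to the marked cell (ValueError if never marked)
        self.pointer = self.marks.index(name)

    def get(self, workspace):
        # 'get' appends the current tape cell to the workspace
        return workspace + self.cells[self.pointer]

    def put(self, workspace):
        # 'put' copies the workspace into the current tape cell
        self.cells[self.pointer] = workspace
```

Marking cell 0 as 'flag', storing "in.comment" there, then doing mark 'here'; go 'flag'; get; go 'here'; from cell 3 leaves "in.comment" in the workspace and the pointer back at cell 3.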

it would be nice for text.tohtml.pss to be able to include a float

right map, or map-tile image. I could use the same format as for

images but the image would be an OSM map.

NO gave up this idea, see above

Also, I think a “flag” register would be good, which could be used for states like “in.comment” etc. It could work like this

clear; add "in.comment"; flag; clear;

# now check the text in the flag register

clear; tell; "in.comment" {

clear;

# do parsing specific to comment blocks

}

At the moment I use the accumulator register with the zero

etc commands. But the accumulator was really designed for

counting stuff, not keeping track of parsing state.

I think I will try to read some compiler books to give me some context for my work on pep/nom: Here is the list from Bob Nystrom's twitter post

Just started working on the nom ↦ zig translator. I used an automatic translation from google gemini to translate the rust machine struct and methods into zig. I am not sure how accurate it will be. Zig could have quite a steep learning curve because all memory is explicitly allocated and deallocated. I still haven't fixed the interpret() method in the nom ==> perl compiler but will get round to it at some stage.

The Ruby and Python translators could also have an interpret() method

because, apparently, they can both execute a string as code.
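Here is a minimal sketch of the Python side of this idea, using the built-in exec() to run generated source held in a string. The convention that the generated code defines a run(text) function is an assumption made for this example; the real translators may structure their output quite differently, and exec() should only be used on code you generated yourself.

```python
def interpret(generated_source, input_text):
    """Execute generated Python source and call its (assumed) run() function."""
    namespace = {}
    exec(generated_source, namespace)  # defines run() inside namespace
    return namespace["run"](input_text)


# stand-in for code that a nom -> python translator might emit
generated = '''
def run(text):
    # toy "script": upper-case the whole input
    return text.upper()
'''
```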

fixed the escape and unescape methods in /tr/nom.tolua.pss /tr/nom.torust.pss

and /tr/nom.toperl.pss so that the escape character is escaped properly.

Also, in /object/machine.interp.c and /object/machine.methods.c

rewrote the escapeChar() method in the perl translator to fix a bug where the escape character (by default a backslash) was not being escaped properly. This code needs to go into all the translators and the interpreter, and probably also into the unescapeChar() method.

All first generation tests in the perl translator are now working. But this script probably just works on Unicode code points (using utf8), not on grapheme clusters.

working on the perl translator /tr/dev.nom.toperl.pss or /tr/nom.toperl.pss

which is based on the rust one.

The rust translator is now working well with first and second generation

tests all working as well as being able to translate big scripts

in the example folder. The translator is still a unicode

code-point translator (not grapheme clusters) so that is a limitation,

but converting to grapheme clusters is not a big job using a rust

crate (external library).

I am always surprised when second generation tests actually work, but they do, and it always seems remarkable.

Also, I discovered that the google gemini AI engine can actually

translate the pep machine very well into other languages if it is

given a good script to work with (eg the rust translator) and I will

use it to speed up the work of translating to other languages like

perl, haskell, swift, R, julia, and lisp.

Working on the rust translator /tr/nom.torust.pss. I am writing it as a unicode code-point parser. If I get it working well I will write another version for unicode grapheme clusters. The rust version has taken a bit more time because the compiler is strict and there is lots of “illegal borrowing” malarkey.

Adding some utf8 support to the /tr/nom.tolua.pss translator.

Also, the lua reference manual has a complete EBNF syntax for the language, which may be interesting for me to try to implement in nom.

Some debugging of the lua translation script which seems to be

working quite well.

/tr/nom.tolua.pss is working for tests in /tr/translate.test.txt

/eg/text.tohtml.pss script

which should render like this

rust | dart | perl | lua | go | java | javascript | ruby | python | tcl | c

Wrote /eg/text.snippet.tohtml.pss which can work with /eg/nom.tohtml.pss

to render a nom script into html with the doc header rendered as a

document in the same format as these pages. also wrote a bash

function which assembles this into a page, which is in the

blog.sh script

I just realised that a simple way to ensure there are at least 2 parse tokens in the workspace is:

E"*word*",E"*text*" {...}

The leading “*” in the ends-with test means that there must be at least 2 tokens.
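Here is a tiny Python model of why this works, treating the workspace as a string of parse tokens each terminated by '*'. The helper name is hypothetical; nom's E"..." test is modelled here as a plain ends-with comparison on the workspace.

```python
def ends_with(workspace, suffix):
    """Model of nom's E"..." test: does the workspace end with suffix?"""
    return workspace.endswith(suffix)


# A lone token has no '*' in front of it, so the leading '*' cannot match.
print(ends_with("word*", "*word*"))       # False
print(ends_with("text*word*", "*word*"))  # True
print(ends_with("word*", "word*"))        # True
```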

Doing some work on the documents in /doc/ Added the “echar” command

to the machine, but not tested and not added to bumble.sf.net/books/pars/asm.pp

(but has been added to bumble.sf.net/books/pars/compile.pss ). Fixed very minor

issues in /eg/text.tohtml.pss with quotes and emphasised text.

need to check the stack and unstack commands in the

file /object/machine.interp.c to make sure that they update the

tape pointer.

I have been editing some documents in the /doc/ folder and created

a system for including random quotes at the beginning of each page.

This uses the /eg/quotes.tolua.html.pss which extracts all the

quotes from /doc/misc.quotes.txt and creates a lua “table” (associative

array) and a short lua script to get one random quote from the

array. The blog.rq function in /webdev/copy.blog.sh will make this

lua script from the quote file.

This is a pretty convoluted way to achieve this, but it seems to work.

The lua translator is getting better but I have some difficulties with how to read or consume the input-stream/file/string etc. I have been splitting files into lines, but it is probably better just to read the whole file into a variable and then read the input buffer 'character' by 'character'. One problem is that there is no such thing as a character, at least in the view of unicode. A user-perceived character (a grapheme cluster) can consist of a sequence of code points: a base character or glyph combined with 'combining marks' (diacritics, emoji modifiers etc).

The script /eg/brainfork.c.pss seems to be working well with

multiple commands reduced to one with parsing techniques and

using the accumulator register. 'Brainfork' is my name for the

brainf**k esoteric language.

May have finally fixed the escape/unescape bug in bumble.sf.net/books/pars/object/machine.interp.c, where a character was escaped by the escape command even when it was already escaped. Also updated bumble.sf.net/books/pars/object/machine.methods.c, but not tested. Also added mark; and go; syntax to machine.interp.c without testing; I need to add the same to machine.methods.c.
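The note "need to count preceding escape chars" points at the rule: a character is already escaped only if it is preceded by an odd number of escape characters (two backslashes are an escaped backslash, not an escaped quote). Here is a Python sketch of that rule, with hypothetical function names and the backslash assumed as the escape character.

```python
def is_escaped(text, index, esc="\\"):
    """True if text[index] is preceded by an odd number of escape characters."""
    count = 0
    i = index - 1
    while i >= 0 and text[i] == esc:
        count += 1
        i -= 1
    return count % 2 == 1


def escape_char(text, ch, esc="\\"):
    """Put esc before every occurrence of ch that is not already escaped.
    Assumes ch is not the escape character itself."""
    out = []
    for i, c in enumerate(text):
        if c == ch and not is_escaped(text, i, esc):
            out.append(esc)
        out.append(c)
    return "".join(out)


def unescape_char(text, ch, esc="\\"):
    """Remove the escape character in front of each escaped occurrence of ch."""
    out = []
    for i, c in enumerate(text):
        if (c == esc and i + 1 < len(text) and text[i + 1] == ch
                and not is_escaped(text, i, esc)):
            continue  # drop this escape char; ch is kept on the next iteration
        out.append(c)
    return "".join(out)
```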

Having another look at /eg/brainfork.c.pss, which is an experiment in reducing multiple brainfork commands to one. Eg +++---- can become - or >>>><< can become >>. This is a simple optimisation in the code production phase of the nom script. I use list tokens to do this, but it complicates the script grammar because you have to look ahead to see what the next token is.
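For comparison, here is the same folding done directly on a string in Python. It is not how the nom script does it (that uses list tokens and lookahead); it just shows the net-count idea: each run of +/- or of >/< is replaced by its net effect. The function name is hypothetical.

```python
def fold_brainfork(code):
    """Collapse each run of +/- (and of >/<) into its net effect,
    eg '+++----' -> '-' and '>>>><<' -> '>>'. Other characters are copied."""
    out = []
    i = 0
    while i < len(code):
        c = code[i]
        if c in "+-":
            up, down = "+", "-"
        elif c in "><":
            up, down = ">", "<"
        else:
            out.append(c)
            i += 1
            continue
        net = 0
        while i < len(code) and code[i] in (up, down):
            net += 1 if code[i] == up else -1
            i += 1
        # positive net repeats 'up', negative net repeats 'down', zero emits nothing
        out.append(up * net if net > 0 else down * -net)
    return "".join(out)
```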

still working on the lua translator, simple scripts working.

Decided I need a 'reserve' command for the machine which will reserve a section at the top of the tape array for use as a kind of symbol table. Type checking, scope, type inference etc seem to be the last big hurdle for nom in order to be a fully fledged compiler compiler or parser parser.

Starting to work on the nom ↦ lua translator. I built and installed lua from source which was easy and fast. At the moment the dart translator seems to work well, but still has a bug with the output when parsing a string. Some dart StringBuffer issue.

All first generation tests are working with /eg/nom.todart.pss from stdin input. I would like to allow file and string input and output as well, to make the script usable from within other dart code. I will start to work on /eg/dev.nom.torust.pss, at least to try to get something to compile.

Working on the dart translator nom.todart.pss or dev.nom.todart.pss. Adding unicode categories, scripts and blocks to RegExp matching with the while and whilenot commands and class tests, eg: [:Greek:] will match greek letters. Dart seems to support all categories, scripts etc. But do they clash?

Have been struggling with getting some rust code to compile in the /eg/nom.torust.pss. Will probably have to comment out a lot of things.

Doing work on the rust translator /tr/nom.torust.pss

Looking again at the remarkable work of Fabrice Bellard and his refreshingly simple html. Life is too short for html and css. But I do it anyway.

Had the idea that nom could also be used for binary files. Matching binary patterns is also useful, for example UTF8 text.

Also, I need to rethink the whole approach to Unicode and grapheme

clusters, which are sets of Unicode code points that amalgamate to

form one visible character (eg certain letters with accents).

This is a tricky issue. Nom should normally read one cluster at

a time, not one Unicode code point at a time. But there may be

rare cases where we want to get one Unicode char at a time.

So the default behaviour of read should be to read one cluster, like the dart characters api. But there could be a variant read command that reads one Unicode character (Rune?) and also maybe one byte. The byte-read command could be used for parsing binary files....
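Here is a Python sketch of the three read granularities discussed above: bytes, code points and (approximate) clusters. Python's standard library has no grapheme-cluster segmentation (Dart's characters package does), so read_clusters is a deliberately rough approximation; the function names are hypothetical.

```python
import unicodedata


def read_bytes(text):
    """One item per UTF-8 byte (for binary or byte-level parsing)."""
    return list(text.encode("utf-8"))


def read_code_points(text):
    """One item per Unicode code point (what iterating a Python str gives)."""
    return list(text)


def read_clusters(text):
    """Rough approximation of grapheme clusters: a base code point plus any
    following combining marks. Full segmentation (Unicode UAX #29) also
    handles emoji ZWJ sequences, Hangul syllables, regional flags etc."""
    clusters = []
    for ch in text:
        if clusters and unicodedata.combining(ch):
            clusters[-1] += ch
        else:
            clusters.append(ch)
    return clusters


word = "e\u0301te"  # 'e' + COMBINING ACUTE ACCENT, then 't', 'e'
print(len(read_bytes(word)), len(read_code_points(word)), len(read_clusters(word)))  # 5 4 3
```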

Have been reading about PEGs (parsing expression grammars), which seem to be what nom is good at parsing, with some caveats.

Playing with more formats in /eg/text.tohtml.pss eg No 123 and maybe horizontal bar-charts, which could just go in lists. But you probably need a table to line up the starts of the bars.

- [bar:nom:42/100:%]

- [bar:lua:12/100:%]

- [bar:wren:54/100:%]

The % is a unit name and the 42/100 is a bar width. This number should be printed inside the bar at the end. “nom”, “lua” etc are the labels printed before the bar. Need to calculate width in em or ex as below, because a percentage width is relative to the container.

/* calc(expression) */

calc(100% - 80px)

/* Expression with a CSS function */

calc(100px * sin(pi / 2))

/* Expression containing a variable */

calc(var(--hue) + 180)

/* Expression with color channels in relative colors */

lch(from aquamarine l c calc(h + 180))

<table class="chart">

<tr>

<th>wren</th><td>

<div class="chart-bar wren" style="width: 14%;">0.12s </div></td>

</tr>

<tr>

<th>luajit (-joff)</th><td>

<div class="chart-bar" style="width: 18%;">0.16s </div></td>

</tr>

<tr>

<th>ruby</th><td>

<div class="chart-bar" style="width: 23%;">0.20s </div></td>

</tr>

<tr>

<th>python3</th><td>

<div class="chart-bar" style="width: 91%;">0.78s </div></td>

</tr>

</table>
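Here is a Python sketch of the [bar:...] idea, mapping each bar line to a row of a chart table like the one above. The regular expression, the function names and the use of a percentage width are assumptions (em or ex units may turn out better); the chart and chart-bar class names are taken from the example table.

```python
import re
from html import escape

# '- [bar:LABEL:VALUE/TOTAL:UNIT]' as proposed for text.tohtml.pss
BAR_RE = re.compile(r"- \[bar:([^:\]]+):(\d+(?:\.\d+)?)/(\d+(?:\.\d+)?):([^\]]*)\]")


def bar_row(line):
    """Turn one bar line into a <tr>, or return None if it is not a bar."""
    m = BAR_RE.fullmatch(line.strip())
    if m is None:
        return None
    label, value, total, unit = m.groups()
    if float(total) == 0:
        return None  # avoid dividing by zero on a malformed bar
    percent = 100 * float(value) / float(total)
    return ('<tr><th>{}</th><td><div class="chart-bar" style="width: {:g}%;">'
            '{}{}</div></td></tr>').format(escape(label), percent, value, escape(unit))


def bar_table(lines):
    """Wrap all valid bar rows in one table so the bars line up."""
    rows = [bar_row(line) for line in lines]
    return '<table class="chart">\n' + "\n".join(r for r in rows if r) + "\n</table>"
```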

Still working on the /eg/nom.todart.pss, which is now working with about 3 commands and <stdin>. Added No abbreviation to text.tohtml.

The script /eg/nom.tolatex.pss is now working more or less ok. The colours are not great, but that is easy to change. I invented a new and interesting parsing technique for escaping special LaTeX characters and styling each component of a NOM script.

Added some abbreviations to vim about translation to go, and

also a shortcut in /eg/text.tohtml.pss for links to translation scripts

like this

rust | dart | perl | lua | go | java | javascript | ruby | python | tcl | c

and also a list

like this [nom:translation.list]

Working on the /eg/nom.tolatex.pss script, which has proved tricky because I couldn't use the listings package nor the minted package for code listings, so I had to write my own formatter, which seems to be working.

The script /eg/nom.tolatex.notunicode.pss does print nice NOM code listings but can't handle any unicode characters in the source, which is silly.

Finished the script /eg/nom.tohtml.pss which prints colourised NOM in HTML using <span> tags. Also wrote /eg/nom.snippet.tohtml.pss which only pretty prints within <code class='nom-lang'> tags. This allows it to work with /eg/text.tohtml.pss

I reformatted most of the documentation with the new pretty printed NOM example code. Also just plain file names that start with / get linked in html now. Also made a new codeblock syntax starting with ---+ and ending ,,, which is specifically for nom code.

Added unordered lists to eg/text.tohtml.pss . The parsing was sort of

bizarre because there is no start token for an unordered list in my

quirky plain-text document format

Lists are just started with a list-item indicator which is a dash word

'-' which starts a line. Lists are terminated with a blank line or

the end of the document, so I reduce the lists in reverse: that is,

when I find the end of the list I add an endlist* token and then

reduce all the item*text*endlist* sequences and keep reparsing

until there are none left.
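Here is a Python sketch of this reverse reduction, working on a list of (token, attribute) pairs instead of the pep stack and tape. The item*, text* and endlist* names come from the text; the final list token name and the html produced are assumptions, and the real script reduces incrementally with .reparse rather than in one loop.

```python
def reduce_list(tokens):
    """tokens: (name, value) pairs, eg ("item", "-") and ("text", "apples").
    At the end of a list an endlist token is added, then every
    item text endlist sequence is reduced to a new endlist, so the html
    grows from the last item back towards the first."""
    stack = list(tokens) + [("endlist", "")]
    while (len(stack) >= 3 and stack[-3][0] == "item"
           and stack[-2][0] == "text" and stack[-1][0] == "endlist"):
        items = "<li>" + stack[-2][1] + "</li>\n" + stack[-1][1]
        del stack[-3:]
        stack.append(("endlist", items))
    # no item* precedes the endlist token any more: it becomes a finished list
    _, items = stack.pop()
    stack.append(("list", "<ul>\n" + items + "</ul>\n"))
    return stack
```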

I was sort of surprised that it works, but it seems to.

/eg/text.tohtml.pss

list* token live for a bit longer and

parse starline*list sequences, just like I do for block

quotations.

<:0:4:>>:/image/name.gif> or <name.gif>

Added equationset* token to maths.tolatex.pss. This script is producing really nice output from simple ascii arithmetic expressions (Unicode symbolic expressions should work when the NOM script is translated to go or JAVA etc). Add more LaTeX symbols like greek letters.

Still don’t have derivative and partial derivative symbols.

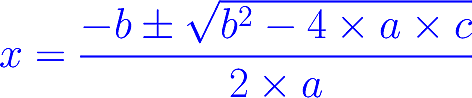

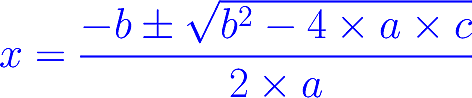

pep -f eg/maths.tolatex.pss \

-i 'x == (-b :plusminus sqrt(b^2-4*a*c))/(2*a);' > test.tex

pdflatex test.tex;

# see below for rendering

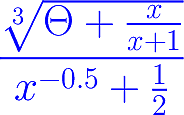

pep -f eg/maths.tolatex.pss \

-i ' cuberoot(:Theta + x/(x+1))/(x^-0.5 + 1/2) '

# see below for rendering with pdflatex

made abbreviation code in eg/nom.syntax.reference.pss much better. also

added a help system (but still need to write it)

Made a template script eg/nom.template.pss which has an error and help

token and a parse-stack watch and some common parsing code

Looking at making some html colourized output. The css is in

/site.blog.css I inspected elements at the wren.io site for ideas.

Added comments in documents (lines that start with #: ) in

eg/text.tohtml.pss

Yesterday, I created eg/maths.to.latex.pss which transforms formulas like x:=sqrt(x^2+y^2)/(2*x^-1.23) into really nice LaTeX formatted mathematics. I am impressed with my own work. It works.

I also invented the following lookahead rule which does a lot of work. This is a positive grammar lookahead rule, because it reduces any of a set of token sequences only if that sequence IS followed by ) or , or ; Because it is a positive rule I can combine several sequences into one rule.

e := e op.compare e | e op.and e | e op.or e

(LOOKAHEAD 1: ')' | ',' | ';' )

The bracketed expression means: only perform the reductions if the following parse token (in this case a literal token) is one of the 3 alternatives.

# when surrounded by brackets or terminated with ; or ,

# eg: (x^2 != y) or x && y,

B"expr*op.compare*expr*",B"expr*op.and*expr*",B"expr*op.or*expr*" {

!"expr*op.compare*expr*".!"expr*op.and*expr*".!"expr*op.or*expr*" {

E")*",E",*",E";*" {

replace "expr*op.and*expr*" "expr*";

replace "expr*op.or*expr*" "expr*";

replace "expr*op.compare*expr*" "expr*";

push;push;

# assemble attribs for new exp token,

--; --; add " "; get; ++; get; ++; get; add " "; --; --; put;

# transfer unknown token attrib

clear; ++; ++; ++; get; --; --; put; clear;

# realign tape pointer

++; .reparse

}

}

}
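Here is the same positive lookahead rule as a Python sketch over a plain list of token names, with attributes left out. It only illustrates the control flow; the nom version above also assembles the attribute text on the tape.

```python
BINARY_OPS = {"op.compare", "op.and", "op.or"}
LOOKAHEAD = {")", ",", ";"}


def reduce_with_lookahead(tokens):
    """Apply  e := e op.compare e | e op.and e | e op.or e
    only when the token after the second e is ')' ',' or ';'.
    After each reduction scanning starts again, like .reparse."""
    tokens = list(tokens)
    i = 0
    while i + 3 < len(tokens):
        if (tokens[i] == "expr" and tokens[i + 1] in BINARY_OPS
                and tokens[i + 2] == "expr" and tokens[i + 3] in LOOKAHEAD):
            tokens[i:i + 3] = ["expr"]  # the lookahead token stays in place
            i = 0
        else:
            i += 1
    return tokens
```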

I have been reforming and developing the arithmetic expression parser, which is now called eg/maths.parse.pss. It now includes good error handling and a help-text system (for explaining the syntax of the expression parser). I also added an assignment operator (:=), logic operators (&& || AND OR), comparison operators (== != < <= > >= etc) and functions like sqrt(...).

It is now basically a template for how to write a language with NOM, and it also forms a reasonably big chunk of any kind of computer language parser/compiler. It can be more or less cut and pasted into other scripts.

Things to do on the PEP and NOM system.

translate.perl.pss using the new syntax at

eg/nom.syntax.reference.pss

nom.syntax.recognise.pss based on script above, which just says “yes: nom syntax ok” or “no: etc”. This script would become the basis of a non-error-checking nom parser.

eg/drawbasic.pss

text.tohtml.pss

eg/ to html with text.tohtml.pss

text.tolatex.pss for printing a book

Starting to write the parser for a simple LOGO-ish drawing language. The language is /eg/drawbasic.pss but I hope to change that name if the language gets good. ♛

Also, I was working today on /eg/nom.to.listing.pss

which is supposed to be a simple precursor to a

nom.to.html.pss html pretty-printer.

Have uploaded some solutions to problems at the www.rosettacode.org site. The NOM syntax checker nom.syntax.reference.pss is close to complete. I will use this script as a “reference” for what the syntax of nom is and should be.

Need to think about the mark and go syntax. It might be better to use the workspace value as the mark; I am not sure.

I will no longer permit ridiculous ranges in classes, silly ranges like [\n-\f]. I think in the PEP interpreter these are currently accepted, but what does it even mean? I will just allow simple ranges like [a-g]. Also, I need to remove '<' and '>' as abbreviations for ++ and -- because they clash with <eof> etc. Also, remove 'll' and 'cc' as aliases for chars and lines.

Have been working on the /eg/nom.syntax.reference.pss, which is a syntax checker for the NOM script language. I have made good progress and have added some new parse tokens, such as statement* and statementset* (for a list of statements). And command* is now just the command word like add or push and not the whole statement. “eof” and “reparse” are parsed as the word* grammar token and reduced later to test* and statement*.

Trying to write a nom error checking script without adapting an existing translation script. So I am writing it from scratch, and in the process I am discovering strange things about the existing grammar that I have been using. For example I use '>' and '<' as abbreviations for ++ and -- but this clashes with the <eof> syntax. So I will remove these abbreviations and also the '+' '-' abbreviations for a+ and a-, because it is really silly to have 1-character abbreviations for 2-character commands.

Also, I should have a command* token for add clear

upper for example and then a statement* token for

add "hi"; replace "x" ""; clear;

Also just use a word* token for “eof” reparse etc. Things that are

not valid commands or tests but part of.

I made symlinks for the bumble.sf.net/books/pars/tr and bumble.sf.net/books/pars/eg folders on this site so that the example scripts and translators will also be available here. And I will create a document index for them.

Yesterday I wrote quite a large chunk of an XML parser (it is still in a documentation page). I was surprised how easy it was. I thought xml parsing was going to be difficult because it seemed to have multiple levels of nesting: firstly on an internal tag level (a list of attributes within the tag) etc.

I also discovered some new techniques for error checking and reporting. For example: look for a parse token which is at the end of a sub-pattern; in the case of XML an example is >* and />* (literal tokens). These should always resolve to a tag* parse token, so if you check for this token followed by anything else you will trap a lot of errors.

# a fragment

pop;pop;

B">*",B"/>*" {

# error, the tag* token didn't resolve or reduce as

# it should have.

}

push;push;

Also, I realised that you can just create the error message and then print it with line number etc at the end of the error block. I now favour putting all errors in a big block just after the parse label (although EOF errors will probably still need to go at the end of the script). Also, 2 token error checking seems to be the most useful in general. Also, it’s a good idea to have a list of tokens at the start of your script, and then just look at them to see which ones can follow others.
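Here is a Python sketch of the 2-token error check described above: look up the first token of the pair, build the message, then add the position once at the end. The rule table, message wording and line/char parameters are hypothetical; in a nom script the position would come from the machine's line and character counters.

```python
# first token of a pair -> what went wrong (hypothetical wording)
ERROR_RULES = {
    ">": "a '>' token was not reduced into a tag",
    "/>": "a '/>' token was not reduced into a tag",
}


def check_pair(first, second, line, char):
    """Return an error message if the 2-token sequence first, second is
    invalid, otherwise None."""
    message = ERROR_RULES.get(first)
    if message is None:
        return None
    # the position is added once, at the end of the error block
    return "[line {}, char {}] {} (followed by '{}')".format(line, char, message, second)
```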

I have been doing a lot of work on the nomlang.org site (where this file is) including writing a quite useful BASH script which manages this website. This site nomlang.org is now the sort-of “home” of the Pep & Nom system, or at least of all the documentation

While writing the primitive-but-good static site generator in BASH I also wrote a new NOM script to format the plain text into HTML. This script is called eg/text.tohtml.pss and it works remarkably well as far as I can see. I actually started off with very humble aims for the script; in fact I just wanted to do something like this

begin { add "<html><body>\n"; print; clear; }

until "\n";

# escape & first, otherwise the & in &lt; and &gt; would be escaped again
replace "&" "&amp;"; replace "<" "&lt;"; replace ">" "&gt;";

[:space:] { clear; add "<p>\n"; }

B"###" {

clop;clop;clop; put; clear;

add "<h3>"; get; add "</h3>\n";

}

B"##" {

clop;clop; put; clear;

add "<h2>"; get; add "</h2>\n";

}

B"#" {

clop; put; clear;

add "<h1>"; get; add "</h1>\n";

}

print; clear;

(eof) { add "</body></html>\n"; print; quit; }

In other words, it would just mark paragraphs and sort-of MARKDOWN headings. But it just grew and grew and it has been really successful because I can just add syntax to it willy-nilly and if it breaks I can easily fix it. So I will almost certainly never use eg/mark.html.pss again because it is hard to debug.

Wrote a nom script eg/bash.show.functions.pss

which prints bash functions in a file

and the comments above them.

I am revisiting this system after almost 3 years of not doing anything on it. It still seems like a remarkably new way of parsing and compiling and worth pursuing. I updated the website at nomlang.org and improved some example scripts (like bumble.sf.net/books/pars/eg/exp.tolisp.pss) and created a new text-to-html formatter bumble.sf.net/books/pars/eg/text.tohtml.pss which is much simpler to maintain than bumble.sf.net/books/pars/eg/mark.html.pss because it uses a less complex grammar.

Working on the mark.latex.pss script which now supports most syntax including images. The script is quite complex. It should be straightforward to translate it to other targets such as “markdown”, html, man, GROFF etc.

Made a magical interpret() method in the perl translator which

will allow running of scripts.

Working on a simplified grammar for tr/translate.perl.pss which

I hope to use in all the translator scripts. So far so good. Also

introducing a new expression grammar for tests eg:

(B"a",B"b").E"z" { ... }

This allows mixing AND and OR logic in tests. Also, a nom script that extracts all unique tokens from a script would be useful.
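To see how mixing AND and OR could be evaluated, here is a small recursive-descent sketch in Python that compiles such a test into a predicate on the workspace. It only handles B"...", E"..." and plain "..." tests, and the precedence of '.' over ',' is an assumption of this sketch, not a documented nom rule; with the brackets in the example the precedence does not matter anyway.

```python
import re

# a quoted test (optionally prefixed by B or E) or one punctuation character
TOKEN_RE = re.compile(r'\s*(?:([BE]?)"([^"]*)"|([(),.]))')


def tokenize(src):
    tokens, pos = [], 0
    src = src.rstrip()
    while pos < len(src):
        m = TOKEN_RE.match(src, pos)
        if m is None:
            raise SyntaxError("unexpected text at position %d" % pos)
        prefix, text, punct = m.groups()
        tokens.append(punct if punct else (prefix, text))
        pos = m.end()
    return tokens


def compile_test(src):
    """Compile a test like (B"a",B"b").E"z" into a function of the workspace.
    Assumptions: ',' is OR, '.' is AND, and '.' binds tighter than ','."""
    tokens = tokenize(src)
    pos = 0

    def peek():
        return tokens[pos] if pos < len(tokens) else None

    def atom():
        nonlocal pos
        tok = peek()
        pos += 1
        if tok == "(":
            pred = or_expr()
            if peek() != ")":
                raise SyntaxError("missing )")
            pos += 1
            return pred
        if not isinstance(tok, tuple):
            raise SyntaxError("expected a test, got %r" % (tok,))
        prefix, text = tok
        if prefix == "B":
            return lambda ws: ws.startswith(text)
        if prefix == "E":
            return lambda ws: ws.endswith(text)
        return lambda ws: ws == text

    def and_expr():
        nonlocal pos
        preds = [atom()]
        while peek() == ".":
            pos += 1
            preds.append(atom())
        return lambda ws: all(p(ws) for p in preds)

    def or_expr():
        nonlocal pos
        preds = [and_expr()]
        while peek() == ",":
            pos += 1
            preds.append(and_expr())
        return lambda ws: any(p(ws) for p in preds)

    pred = or_expr()
    if pos != len(tokens):
        raise SyntaxError("unexpected trailing tokens")
    return pred
```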

Looking at ANTLR example grammars, for ideas of simple languages such as “logo”, “abnf”, BNF, “lambda”, “tiny basic”. Reforming grammars of the translators, writing good “unescape” and “escape” functions that actually walk and transform the workspace string. Converting perl translator to a parse method.

Need an “esc” command to change the escape char in all translators.

The perl translator is almost ready to be an interpreter.

Debugged the TCL translator; it appears to be working well except for second generation scripts.

Current tasks: finish translators, perl/c++/rust/tcl

start translators: lisp/haskell/R (maybe)

Write a new command “until” with no arguments.(done in some translators)

Make the translators use a “run” or “parse” method, which

can read and write to a variety of sources.

Make the tape in object/pep.c dynamically allocated.

See if begin { ++; } creates space for a variable, and use this strategy for variable scope.

Starting to create date-lists in eg/mark.latex.pss to render lists

such as this one. Also, had the idea of a new test

F:file.txt:"int" { ... }

This would test if the file “file.txt” contains a line starting with “int” and ending with “:” + workspace. This test would allow checking variable types and declarations. It would also allow better natural language parsing, because a list of nouns/adj/verbs etc could be stored in a simple text file and looked up.

int.global:x

int.fn:x

string.global:name

string.local:name

etc

F:name.txt: { ... }

Would check the file name.txt for a line which begins with the tape

and ends with the workspace.
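Here is a Python sketch of the proposed F: test, using the symbol-table lines above as the file contents. The function name is hypothetical and the matching rule (a prefix at the start of the line, then ':' and the workspace at the end) follows the description above; for the F:name.txt: form the prefix would be the current tape cell.

```python
def file_test(lines, prefix, workspace):
    """Hypothetical F:file.txt:"prefix" test: true if some line starts with
    prefix and ends with ':' followed by the workspace text."""
    for line in lines:
        line = line.rstrip("\n")
        if line.startswith(prefix) and line.endswith(":" + workspace):
            return True
    return False


SYMBOLS = """int.global:x
int.fn:x
string.global:name
string.local:name
"""

lines = SYMBOLS.splitlines()
print(file_test(lines, "int", "x"))              # True
print(file_test(lines, "string", "x"))           # False
print(file_test(lines, "string.local", "name"))  # True
```

With a real file the same function can be given an open file object, eg `with open("file.txt", encoding="utf-8") as f: file_test(f, "int", "x")`.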

A lot of work on the Javascript translator tr/translate.js.pss

1st gen tests are working. Working on the rust translator and

the eg/sed.tojava.pss translator.

New ideas: create a lisp parser, create a brainf*** compiler

(done see: /eg/brainfork.c.pss )

create a “commonmark” markdown translator. This should be

not too hard, using the ideas in bumble.sf.net/books/pars/eg/mark.latex.pss

will create a 'date list' format for mark.latex.pss and mark.html.pss

Started a lisp parser eg/lisp.pss

Worked on eg/mark.latex.pss which is now producing

reasonable pdf output (from .tex via pdflatex). Also realised

that the accumulator could be used to simplify the grammar

by counting words.

Developed a SED to java script, “eg/sed.tojava.pss”, which has progressed well. Still lacking branching commands and some other GNU sed extensions.

Wrote a simple SED parser and formatter/explainer at eg/sed.parse.pss (commands a,i,c not parsed yet).

Some work on the javascript and perl translators.

Introducing an 'increment' method into the various machine classes in the target languages. This allows the 'tape' and 'marks' arrays to grow if required.

Looking at translation scripts. Changing tape and mark arrays to be dynamically growable in various target languages.

reviewing documentation, tidying.

Working on the pl/0 scripts. eg/plzero.pss and eg/plzero.ruby.pss

eg/plzero.pss now checks and formats a valid pl/0 program.

Working on the palindrome scripts eg/pal.words.pss and

eg/palindrome.pss . Both are working well and can be translated

to various languages (go, ruby, python, c, java)

I would like to add hyphen lists to mark.latex.pss and date

lists (such as this one)

Go translator now working well. I would like to write translators for Kotlin, R (the statistical language), Swift and Rust. The script function pep.tt (in helpers.pars.txt) greatly helps debugging translation scripts.

More progress. A number of the translation scripts are now quite bug free and can be tested with the helper function pep.tt <langname> This script also tests 2nd generation script translation, which is very useful where the original pep engine is not available (for example, on a server).

Continuing work. Starting many translation scripts such as

tr/translate.cpp.pss and trying to debug and complete others.

working on tr/translate.c.pss good progress. simple scripts translating

and compiling and running. Did not eliminate dependencies so that

scripts need to be compiled with libmachine.a in the object/ folder.

tr/translate.ruby.pss

Should try to make a 'brew' package with ruby for pep.

gh.c to pep.c

Made pep look for asm.pp in the current folder or else in the

folder pointed to by the “ASMPP” environment variable.

Need to add “upper”, “lower” and “cap” to the translation scripts in pars/tr/

Things done:

gh.c files etc).

machine.interp.c (need to count preceding escape chars)

Need to fix the same in the translation scripts

pandoc guy to try to generate some interest in pep.

tcl translator

Have made some more good progress over the last few days. Modified the script bumble.sf.net/books/pars/eg/json.check.pss so that it recognises all JSON numbers (scientific etc)

Fixed /books/pars/tr/translate.py.pss so that it can translate scripts as well as itself. Started to fix /books/pars/tr/translate.tcl.pss. There is still an infinite loop when .restart is translated, and this is a general problem with the “run-once” loop technique (used to implement .reparse and .restart in languages that don’t have labelled loops or goto statements).

The solution is a flag variable that gets set by .restart before the parse> label (see translate.ruby.pss).
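A C sketch of a run-once parse loop with a restart flag. C has goto, but the code deliberately avoids it to mimic what a translator emits for languages without labelled loops: inside the parse loop, continue plays the role of .reparse, while .restart sets a flag and breaks out so the outer cycle can skip the rest of the script. Emitting .restart as a bare continue would instead behave like .reparse, and if the matching rule did not change the stack it would match again forever, which is one plausible source of the infinite loop described above. The toy grammar ("ab" reduces to "S"; "SS" reduces to "T" and restarts), checking the flag just after the loop, and all names are assumptions; translate.ruby.pss may arrange this differently.

```c
#include <stdbool.h>
#include <string.h>

/* If stack ends with suffix, replace that suffix with repl and return true. */
static bool reduce(char *stack, const char *suffix, const char *repl) {
    size_t n = strlen(stack), k = strlen(suffix);
    if (n < k || strcmp(stack + n - k, suffix) != 0)
        return false;
    strcpy(stack + n - k, repl);
    return true;
}

/* Toy translated script. stack must hold at least strlen(input)+1 bytes.
   Returns how many cycles reached the end of the script. */
static int run(const char *input, char *stack) {
    bool restart = false;        /* set by .restart, checked after the parse loop */
    int completed = 0;
    stack[0] = '\0';
    for (const char *p = input; *p != '\0'; p++) {    /* one cycle per char */
        size_t n = strlen(stack);                     /* lex section: shift */
        stack[n] = *p;
        stack[n + 1] = '\0';

        while (true) {                                /* parse> run-once loop */
            if (reduce(stack, "ab", "S"))
                continue;                             /* .reparse */
            if (reduce(stack, "SS", "T")) {           /* .restart */
                restart = true;
                break;
            }
            break;                                    /* end of parse section */
        }
        if (restart) {            /* go back to the top of the script */
            restart = false;
            continue;
        }
        completed++;              /* stands for code after the parse section */
    }
    return completed;
}
```

With input "abab" the first three cycles complete; in the fourth, "Sab" reduces to "SS" and then to "T", the restart skips the end-of-script code, and run() returns 3.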

The script eg/mark.latex.pss is progressing well. It transforms a markdown-ish format (like the current doc) into LaTeX. Still need to handle lists, images, tables and dates.

Having another look at this system. I still see enormous potential for it, but don’t know how to attract anyone’s attention!

I updated the eg/json.check.pss script to provide helpful error messages with line and character numbers. Also, that script incorporates Crockford’s scientific number format from eg/json.number.pss. However, Crockford’s grammar for scientific numbers seems much stricter than what is often allowed by json parsers such as the “jq” utility.
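For reference, the strict grammar from json.org (also RFC 8259) is -?(0|[1-9][0-9]*)(\.[0-9]+)?([eE][+-]?[0-9]+)?, so forms such as 01, .5, 1. and +1 are rejected, while leading zeros in the exponent (as in -0.00012e+012) are fine. A hand-written C checker for that grammar, as a sketch rather than a port of eg/json.number.pss; is_json_number() is a hypothetical name.

```c
#include <ctype.h>
#include <stdbool.h>

/* Check s against the strict JSON number grammar:
   -? (0 | [1-9][0-9]*) (. [0-9]+)? ([eE] [+-]? [0-9]+)?  */
static bool is_json_number(const char *s) {
    const unsigned char *p = (const unsigned char *)s;
    if (*p == '-')
        p++;
    if (*p == '0') {
        p++;                              /* a leading zero must stand alone */
    } else if (*p >= '1' && *p <= '9') {
        while (isdigit(*p))
            p++;
    } else {
        return false;                     /* rejects "", "-", "+1", ".5" */
    }
    if (*p == '.') {
        p++;
        if (!isdigit(*p))
            return false;                 /* "1." needs a fraction digit */
        while (isdigit(*p))
            p++;
    }
    if (*p == 'e' || *p == 'E') {
        p++;
        if (*p == '+' || *p == '-')
            p++;
        if (!isdigit(*p))
            return false;                 /* "1e" needs exponent digits */
        while (isdigit(*p))
            p++;
    }
    return *p == '\0';                    /* nothing may follow, so "01" fails */
}
```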

I became distracted by a bootable x86 Forth stack-machine system I was coding at /books/osdev/os.asm. That was also interesting, and I had the idea of somehow combining it with this. Hopefully these ideas will come to fruition.

I think the best idea would be to edit the /books/pars/pars-book.txt document, generate a pdf, print it out, and send it to someone who might be interested. This parsing/compiling system is revolutionary (I think), but nobody knows about it!!

I have not done any work on this project since about August 2020, but the idea remains interesting. Finishing the “translate.c.pss” script would be good (done: sept 2021), as would making “translate.go.pss” for a more modern audience (done: sept 2021).

Working on the script “translate.c.pss” to create c code from a pep script. I may try to eliminate dependency files and include all the required structures and functions in the script. That should make it easier to convert the output to wide characters (“wchar”).

A bash script to test each script translator (such as translate.tcl.pss, translate.java.pss ...).

[done: the pep.tt function]

In the java translator, make the parse/compile script a method of the class, with the input stream as a parameter, so that the same method can be used to parse/compile a string, a file, or [stdin], among other things. (note: not yet done: march 2025)

This technique can be used for any language but is easier with languages that support data-structures/classes/objects.
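The same idea sketched in C rather than Java: the translated parse function takes a character source instead of reading stdin directly, so one function can handle a string, a file or stdin. The Source struct, from_file(), from_string() and parse() are hypothetical names, and parse() here only checks that square brackets balance, standing in for the real translated script.

```c
#include <stdio.h>

/* A minimal character source: next() returns the next char or EOF. */
typedef struct Source {
    int (*next)(struct Source *self);
    FILE *fp;            /* used by file sources */
    const char *str;     /* used by string sources */
    size_t pos;
} Source;

static int file_next(Source *s) { return fgetc(s->fp); }

static int string_next(Source *s) {
    /* cast so that bytes >= 128 are never confused with EOF */
    return s->str[s->pos] ? (unsigned char)s->str[s->pos++] : EOF;
}

static Source from_file(FILE *fp) {
    Source s = { file_next, fp, NULL, 0 };
    return s;
}

static Source from_string(const char *str) {
    Source s = { string_next, NULL, str, 0 };
    return s;
}

/* Stand-in for the translated parse/compile script: returns 1 if the
   square brackets in the input balance, 0 otherwise. */
static int parse(Source *in) {
    int depth = 0, c;
    while ((c = in->next(in)) != EOF) {
        if (c == '[')
            depth++;
        else if (c == ']' && --depth < 0)
            return 0;
    }
    return depth == 0;
}
```

Reading stdin is then just Source in = from_file(stdin); parse(&in); and the parse code itself never needs to know where its characters come from.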

Continuing to work on the scripts translate.py.pss and translate.tcl.pss.

Had the idea to split pars-book.txt into separate MAN pages, just like the TCL system (“man 3tcl string” etc.). The man pages could be generated from the command documentation at nomlang.org/doc/commands/.

Made great progress on the script translate.java.pss which could become a template for a whole set of scripts for translating to other languages.

Continuing to work on translate.java.pss. Still need to convert the push/pop code, and test and debug. Many methods have been in-lined and the Machine class code is now in the script.

Rethinking the translation scripts bumble.sf.net/books/pars/tr/translate.java.pss and bumble.sf.net/books/pars/tr/translate.js.pss These scripts can be greatly simplified. I will remove all trivial methods from the Machine object and use the script to emit code instead. Hopefully translate.java.pss will become a template for other similar scripts. Also, I will include the Machine object within the script output so that there will be no dependency on external code.

Wrote the script /books/pars/eg/json.number.pss, which parses and checks numbers in json scientific format (eg -0.00012e+012). This script can be included in the script eg/json.parse.pss to provide a reasonably complete json parser/checker.

Working on the script /books/pars/eg/mark.html.pss. The script is working reasonably well for transforming the pars-book.txt file into html.

It can be run with:

pep -f eg/mark.html.pss pars-book.txt > pars-book.html

Cleaning up the files in the /books/pars/ folder tree.

Renaming the executable to “pep” from “pp”. I think “pep” will be the tool’s definitive name.

I will rename the tool and executable to “pep”, which would stand for “parsing engine for patterns”. I think it is a better name than “pp”, and it only seems to conflict with “Python Enhancement Proposal” in the unix/linux world.

Wrote a substantial part of the script /books/pars/eg/json.parse.pss, which can parse and check the json file format. However, the parser is incomplete because at the moment it only accepts integer numbers. Recursive object and array parsing is working.

I will try to improve the mark.html.pss “markdown” transform script. I would still like to promote this parsing VM since I think it is a good and original idea.

Did some work on mark.html.pss

Cleaned up memory leaks (with valgrind). Also fixed some off-by-one errors and invalid reads/writes. The double-free segmentation fault seems to be fixed. Still need to fix a couple of memory bugs in interpret() (one is in the UNTIL command).

Trying to clean up the pars-book.txt file, which is the primary documentation file for the project.

Posted on comp.compilers and comp.lang.c to see if anyone might find this useful or interesting...

The implementation at bumble.sourceforge.net/books/pars/object has arrived at a usable beta stage (barring a segmentation fault when running big scripts).

Started the current implementation in the c language. I created a simple loop to test each new command as it was added to the machine, and this proved a successful strategy as it motivated me to keep going and debug as I went.

Wrote an incomplete c version of this machine called “chomski”.

Wrote incomplete versions in c++ and java. The java Machine object at /books/pars/object.java/ got to a useful stage and will be a good target for a script very similar to /books/pars/tr/translate.c.pss (to be called “translate.java.pss”). This script creates compilable java code using the java Machine object. In fact, we will be able to run this script on itself (!). In other words, we can run:

pep -f tr/translate.java.pss tr/translate.java.pss

The output will be compilable java code that can translate any parse machine script into compilable java code. With this java system we are able to use unicode characters in scripts.

It will be interesting to see how much slower the java version is.

Started to think about a tape/stack parsing machine.

The coding of this version was begun around mid-2014. A number of other versions have been written in the past but none was successful or complete.

Trying to get this to look for the ASMPP environment variable to find the “asm.pp” file, which it needs to actually compile and run scripts. Here is a small test of getenv():

#include <stdio.h>
#include <stdlib.h>

int main(void) {
    printf("test\n");
    const char *s = getenv("PATH");
    printf("PATH :%s\n", (s != NULL) ? s : "getenv returned NULL");
    printf("end test\n");
    return 0;
}

First new code for a while. I will add a switch that prints the stack when .reparse is called. (note: no, we can just print the stack after the parse> label with the stack and unstack commands.) This should help in debugging complex grammars (such as mark.html.pss or newmark.pss), but it may be easier to add this to the compile.pss script, since .reparse is just a jump to the parse label.

Trying to use an environment variable to locate 'asm.pp'

Added stack and unstack commands, but they don't update the tape pointer (yet).

Small adjustments to “compile.pss”.

Starting to rewrite compilable.c.pss to convert back to a single class test, and also to follow the changes made to compile.pss (eg negation and “ortestset*” compilation). Keeping compile.pss and compilable.c.pss in sync so that they recognise the same syntax is a maintenance problem. (note: the translation scripts don't really need to use the same grammar as the bumble.sf.net/books/pars/compile.pss compiler, with ortestset etc, because they don't have to compile assembly-style “jumps”.)

# fragment.
# we use a leading or trailing comma to make a test*
# parse-token. This is sort-of "context parsing"
pop;pop;
"quoted*,*","class*,*",",*quoted*",",*class*" {
  replace "quoted*" "test*"; replace "class*" "test*";
  push; push; .reparse
}
pop;
"test*,*test*" {
  push;push;push;

All the “,*” comma tokens above get confusing to look at when in a test with commas, so it could be better to actually make a “comma*” token.

Added the delim command, which changes the stack token delimiter for the push and pop commands.
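A C sketch of push and pop with a configurable delimiter. It assumes the semantics suggested by the fragment above: push moves the workspace text up to and including the first delimiter onto the stack, and pop prepends the top stack token back onto the workspace. It also assumes that each push/pop moves the tape pointer by one, and that stack/unstack are simply push/pop repeated until nothing is left, which is what the earlier note about stack and unstack not updating the tape pointer implies. Pushing the whole workspace when it contains no delimiter is a further assumption. Machine, push(), pop(), stack_all(), unstack_all() and the buffer sizes are hypothetical, not the code in machine.interp.c.

```c
#include <stdbool.h>
#include <string.h>

#define MAXTOK 16                 /* maximum tokens on the stack */
#define TOKLEN 64                 /* maximum bytes per token, incl. '\0' */
#define WSLEN  (MAXTOK * TOKLEN)  /* workspace size */

typedef struct {
    char workspace[WSLEN];
    char stack[MAXTOK][TOKLEN];
    int  depth;                   /* number of tokens on the stack */
    int  tape;                    /* tape pointer, moved by push and pop */
    char delim;                   /* token delimiter; the delim command sets it */
} Machine;

static void machine_init(Machine *m) {
    m->workspace[0] = '\0';
    m->depth = 0;
    m->tape = 0;
    m->delim = '*';
}

/* push: move the workspace text up to and including the first delimiter
   (or the whole workspace if there is none) onto the stack. */
static bool push(Machine *m) {
    size_t len = strlen(m->workspace);
    if (len == 0 || m->depth == MAXTOK)
        return false;
    char *d = strchr(m->workspace, m->delim);
    size_t toklen = d ? (size_t)(d - m->workspace) + 1 : len;
    if (toklen >= TOKLEN)
        return false;
    memcpy(m->stack[m->depth], m->workspace, toklen);
    m->stack[m->depth][toklen] = '\0';
    memmove(m->workspace, m->workspace + toklen, len - toklen + 1);
    m->depth++;
    m->tape++;
    return true;
}

/* pop: prepend the top stack token to the workspace. */
static bool pop(Machine *m) {
    if (m->depth == 0)
        return false;
    const char *tok = m->stack[m->depth - 1];
    size_t tl = strlen(tok), wl = strlen(m->workspace);
    if (tl + wl >= WSLEN)
        return false;
    memmove(m->workspace + tl, m->workspace, wl + 1);
    memcpy(m->workspace, tok, tl);
    m->depth--;
    m->tape--;
    return true;
}

/* stack / unstack: repeat push / pop until nothing is left to move. */
static void stack_all(Machine *m)   { while (push(m)) {} }
static void unstack_all(Machine *m) { while (pop(m)) {} }
```

In this sketch the delim command would just assign m->delim (for example ','), after which a workspace of "a,b," pushes as the two tokens "a," and "b,".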

Rewrote quoteset parsing in bumble.sf.net/books/pars/compile.pss. Much better now; it doesn't use “hops”.

Also, replaced bumble.sf.net/books/pars/asm.pp with an asm.pp generated by the nom compiler:

pep -f compile.pss compile.pss > asm.test.pp

This means that NOM is now self-hosting, yaaaay! I thought it would be nice to have a javascript machine object ...

pep -f translate.js.pss translate.js.pss > pep.js